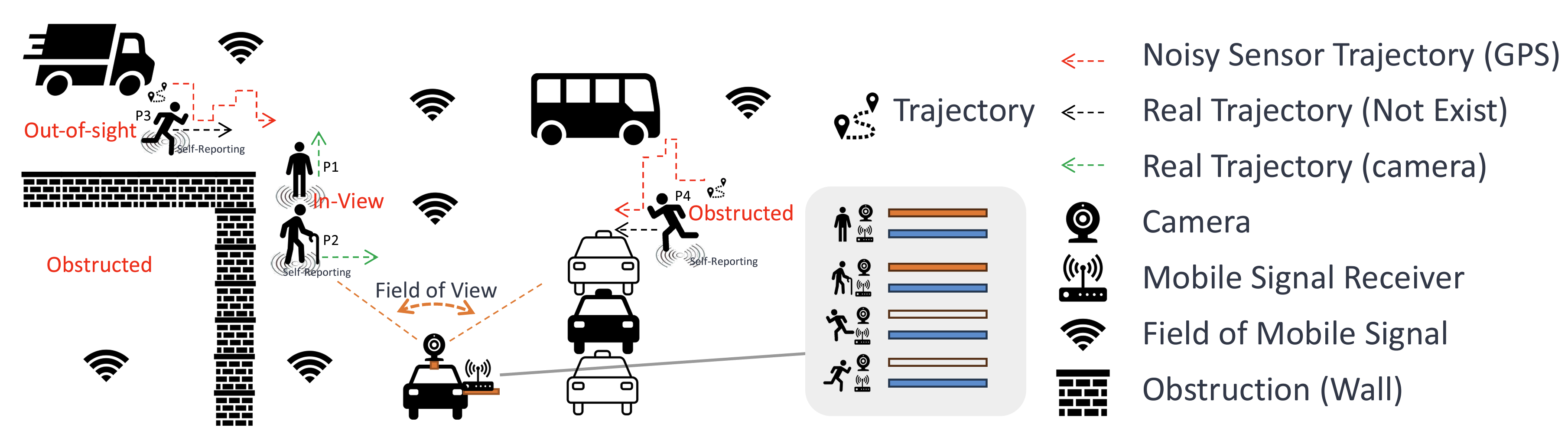

A representative illustration of real-world out-of-sight scenarios in autonomous driving. The autonomous vehicle is equipped with a camera, which captures precise visual trajectories (green dotted arrows), and a mobile signal receiver, which captures noisy sensor trajectories (red dotted arrows), for tracking pedestrians and other vehicles. Pedestrians P1 and P2 are within the camera's field of view, while P3 lies entirely outside it and P4 is occluded by other vehicles. Consequently, P3 and P4 have no captured visual trajectories, yet both may cross into the vehicle's path and pose a risk of collision. The black dotted arrows depict the hypothesized noise-free real trajectories that the mobile sensors would ideally capture, in contrast to the noisy sensor trajectories actually recorded (red arrows). The gray area in the figure demarcates the visibility range of the mobile and visual modalities: white indicates that no data is captured, orange indicates that visual trajectories are available, and blue indicates that mobile trajectories are available.