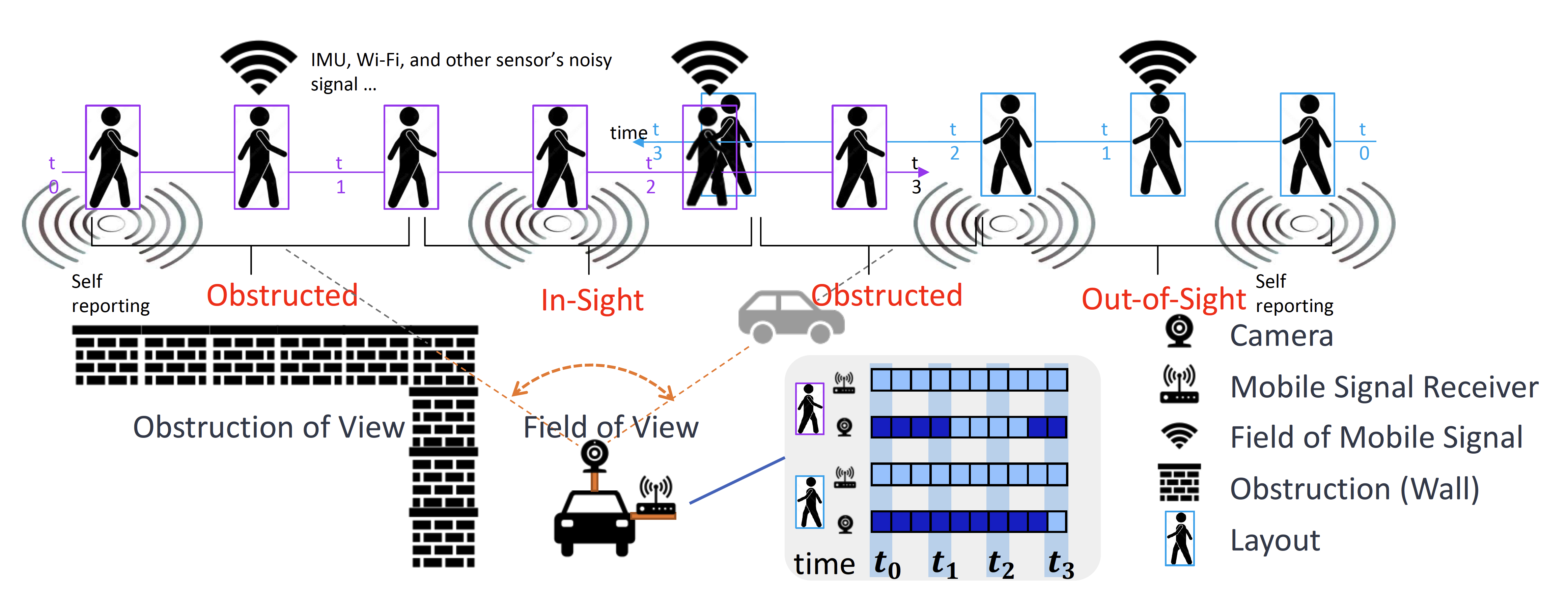

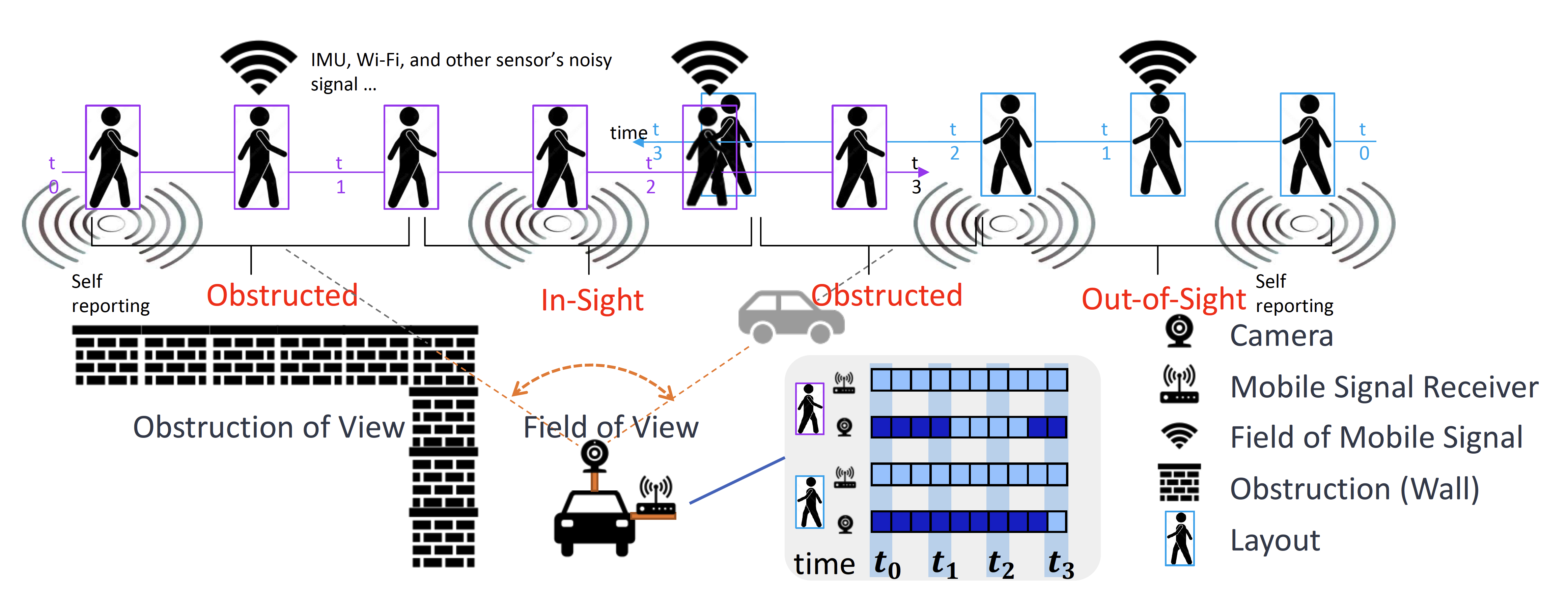

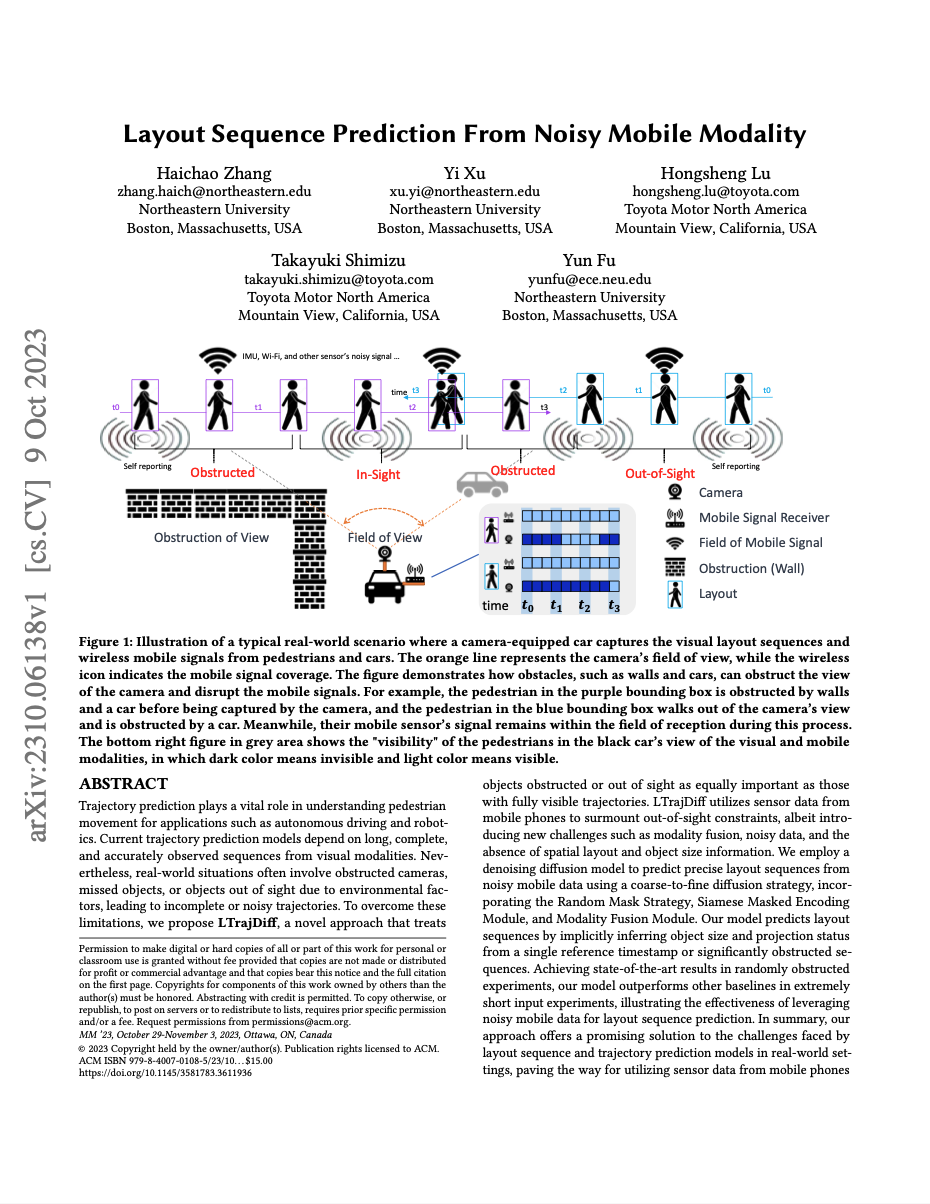

Haichao Zhang, Yi Xu, Hongsheng Lu, Takayuki Shimizu, Yun Fu. Layout Sequence Prediction From Noisy Mobile Modality. ACM MM, 2023. (Paper)

Two-minute papers